
You’re a builder facing a job too big for your tools. You could hire an apprentice. They’d use your tools but need constant supervision. Or hire a contractor. They bring their own tools, have their own specialised skillset, work independently, and report back when done. For big jobs, the contractor is better. You keep working while they handle their part.

That’s exactly the problem we faced building our Integration Builder Agent.

The agent generates Integration configurations: it transforms provider/SaaS endpoints into Unified APIs and AI Tools, researches documentation, writes descriptions, and discovers comprehensive API actions. But time-consuming subtasks kept blocking the main agent, either by requiring human interaction or by awaiting long-running MCP tool calls.

We needed contractors, not apprentices. We needed specialist subagents that work autonomously in the background with their own tools and context, only reporting back when finished. This post walks through how we implemented async subagents using Cloudflare’s Durable Objects and native RPC, transforming our Integration Builder agent from a single-threaded worker into a parallel task orchestrator.

Our Integration Builder Agent generates Integration Configurations (in YAML) that map provider endpoints (like Hibob, Zendesk, HubSpot, Workday and hundreds more) so they can be easily consumed by B2B SaaS products and AI Agents. Each configuration includes anywhere from 20 to over 100 actions, with detailed endpoint descriptions, comprehensive action coverage discovered through documentation, and proper field mappings and authentication configs.

We hit three fundamental constraints:

Context Window Limits

Generating configs, testing them, and researching API actions consumes massive context. Testing alone requires passing entire configs (1K to 10K tokens each) back and forth repeatedly. Add documentation research, OpenAPI spec analysis, and competitor analysis, and the Integration Builder would hit token limits while managing 50+ operations, losing context about earlier work.

Manual Subagent Orchestration in Claude Code

Claude Code supports subagents natively, but they’re “apprentices” from our analogy. They use the parent agent’s tools and context, but require manual user interaction to trigger and retrieve results, all within the same conversation thread. The Integration Builder couldn’t fire-and-forget; it had to stop and wait for human intervention to orchestrate each sub-task.

Long-Running Jobs Make Manual Orchestration Impractical

Even if manual orchestration were acceptable, research tasks take too long. Improving descriptions means reading docs and analyzing examples. Longer jobs could require exhaustive research across documentation, SDKs, and competitor implementations. The main agent should be free to aggregate information from other tools or subagents rather than sit waiting.

Claude Code CLI subagents are specialized AI assistants that operate in separate context windows with specific purposes. The main agent can invoke these subagents either automatically based on task descriptions or explicitly by mentioning them.

Pros

Built-in, zero setup, separate context windows preserve parent agent state

Cons

Even with `--dangerously-skip-permissions`, the CC subagent needs to be manually triggered

This “apprentice” model works well for human-in-the-loop workflows where users manually trigger subagents and review results. But it doesn’t fit autonomous use cases where the Integration Builder needs to delegate long-running research tasks, continue building other config components, and automatically integrate results when ready, without human intervention.

Our first production attempt: expose the subagent as an MCP tool that the Integration Builder could call via HTTP.

Pros:

Cons:

We needed “contractor” agents that could work autonomously in the background without blocking the main agent, handle research-intensive tasks like improving 20+ endpoint descriptions or discovering comprehensive API actions, maintain their own context and tools, and report back only when finished. To achieve this, we built specialist subagents as separate Durable Objects that the Integration Builder calls via native RPC through an MCP tool. Each subagent runs independently with its own autonomous loop, making its own tool calls and research decisions while the Integration Builder continues other work.

What is RPC? Remote Procedure Call lets one program call a function in another program as if it were local code. Instead of constructing HTTP requests and parsing responses, you simply call a method: stub.improveDescriptions({ config, provider }).
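To make the idea concrete, here is a minimal in-process sketch of how an RPC stub can work in general. It is not Cloudflare's implementation: `DescriptionService`, `handleMessage`, and `createStub` are hypothetical names, and the "transport" is just a function that passes a JSON string to the other side.

```typescript
// Hypothetical service that lives "in another program".
class DescriptionService {
  improveDescriptions(args: { config: string; provider: string }): string {
    return `improved:${args.provider}:${args.config.length}`;
  }
}

// Wire format: a method name plus JSON-serialisable arguments.
type RpcMessage = { method: string; args: unknown[] };

// "Server" side: decode the message and dispatch to the real method.
function handleMessage(target: object, raw: string): string {
  const { method, args } = JSON.parse(raw) as RpcMessage;
  const fn = (target as Record<string, unknown>)[method];
  if (typeof fn !== "function") throw new Error(`Unknown method: ${method}`);
  return JSON.stringify(fn.apply(target, args));
}

// Client-side view of a service: same methods, but every result is a Promise.
type Stub<T> = {
  [K in keyof T]: T[K] extends (...a: infer A) => infer R
    ? (...a: A) => Promise<R>
    : never;
};

// "Client" side: a Proxy that turns any method call into a message.
function createStub<T extends object>(
  send: (raw: string) => Promise<string>,
): Stub<T> {
  return new Proxy({}, {
    get: (_obj, prop) => {
      if (prop === "then") return undefined; // keep the stub from looking like a Promise
      return async (...args: unknown[]) => {
        const raw = JSON.stringify({ method: String(prop), args });
        return JSON.parse(await send(raw));
      };
    },
  }) as unknown as Stub<T>;
}

const service = new DescriptionService();
const stub = createStub<DescriptionService>(async (raw) =>
  handleMessage(service, raw),
);

async function demo(): Promise<string> {
  // Reads like a local call: no URL, headers, or response parsing at the call site.
  return stub.improveDescriptions({ config: "actions: []", provider: "zendesk" });
}
```

The call site only sees a typed method; serialisation and dispatch are hidden behind the stub, which is the property the rest of this post relies on.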

Traditional RPC frameworks (like JSON-RPC or gRPC) typically run over HTTP, but Cloudflare’s Durable Object RPC uses an internal communication protocol. When both Workers run in the same isolate, a call is close to a plain function call, with negligible network latency and no HTTP overhead. This enables true fire-and-forget delegation: the Durable Object keeps its state and can continue executing after it has returned a response, which HTTP’s request-response cycle cannot provide.

Implementation:

- A binding in `wrangler.toml` that gives the Integration Builder access to the subagent Durable Object.
- `env.SUBAGENT.get(providerId)`
- `stub.improveDescriptions({ config, provider })`

The Integration Builder delegates work via RPC, gets a task ID back immediately, and continues working. The subagent runs in its own autonomous loop, making its own tool calls, managing its own iterations, and making decisions about research depth. When complete, the Integration Builder retrieves and integrates the results.
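The delegate-and-continue pattern can be sketched without any Cloudflare APIs. The example below is a simulation under stated assumptions: `ImproveDescriptionsSubagent`, `getTaskStatus`, and `buildIntegration` are hypothetical names, tasks live in an in-memory `Map` rather than Durable Object storage, and the "autonomous loop" is a placeholder that appends a comment to the config.

```typescript
type TaskStatus = "queued" | "running" | "complete" | "failed";

interface Task {
  id: string;
  status: TaskStatus;
  result?: string;
  error?: string;
}

type ImproveInput = { config: string; provider: string };

// Stand-in for the subagent. A real Durable Object would persist `tasks`
// in durable storage instead of process memory.
class ImproveDescriptionsSubagent {
  private tasks = new Map<string, Task>();
  private nextId = 1;

  // RPC entry point: record the task, start it, and return straight away.
  improveDescriptions(input: ImproveInput): { taskId: string } {
    const task: Task = { id: `task-${this.nextId++}`, status: "queued" };
    this.tasks.set(task.id, task);
    // Deliberately not awaited, so the caller gets the task ID immediately.
    void this.run(task, input);
    return { taskId: task.id };
  }

  getTaskStatus(taskId: string): Task | undefined {
    return this.tasks.get(taskId);
  }

  // Placeholder for the autonomous loop (research, tool calls, iterations).
  private async run(task: Task, input: ImproveInput): Promise<void> {
    task.status = "running";
    try {
      await Promise.resolve(); // yields control; stands in for real async work
      task.result = `${input.config}\n# descriptions improved for ${input.provider}`;
      task.status = "complete";
    } catch (err) {
      task.status = "failed";
      task.error = String(err);
    }
  }
}

// The parent delegates, keeps working, then checks back later.
async function buildIntegration(
  subagent: ImproveDescriptionsSubagent,
): Promise<string[]> {
  const log: string[] = [];
  const { taskId } = subagent.improveDescriptions({
    config: "actions: []",
    provider: "zendesk",
  });
  log.push(`delegated ${taskId}: ${subagent.getTaskStatus(taskId)?.status}`);
  log.push("built auth config"); // other work proceeds while the task runs
  await new Promise((resolve) => setTimeout(resolve, 0));
  log.push(`checked ${taskId}: ${subagent.getTaskStatus(taskId)?.status}`);
  return log;
}
```

In a real Worker the stub would come from the Durable Object namespace binding rather than `new`; namespaces hand out stubs for an ID object, which is commonly derived from a name with `idFromName`.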

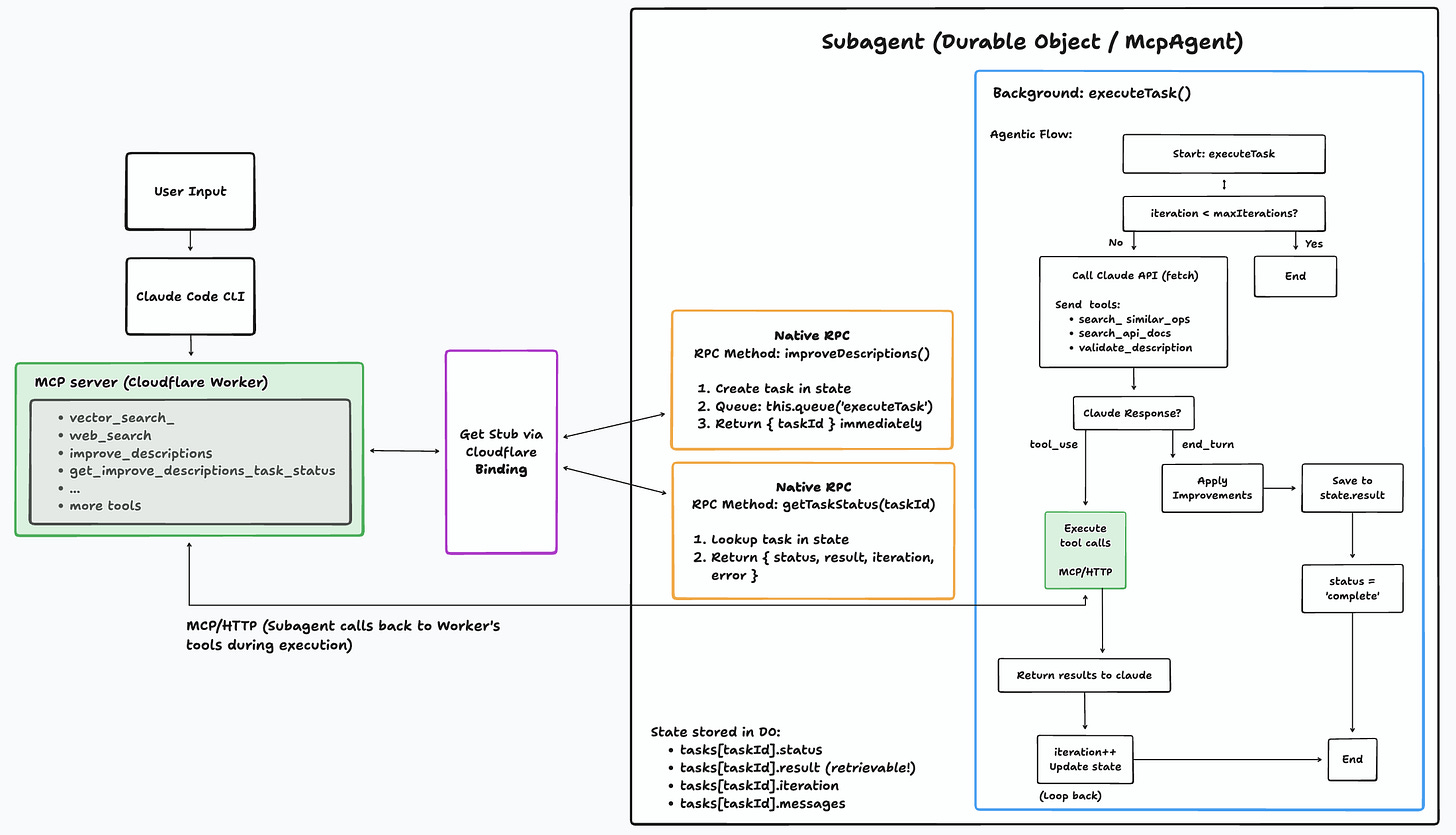

The following diagram shows the complete end-to-end flow when using the improve_descriptions tool, from the user’s initial request through Claude Code CLI to the final result retrieval. It illustrates how the Integration Builder delegates work to the subagent via native RPC, and how the subagent autonomously executes its agentic loop while calling back to the Integration Builder’s tools when needed.

Example scenario: The Integration Builder generates a Zendesk config with 25 operations but generic descriptions. It delegates to the Improve Descriptions subagent, which autonomously researches each endpoint in the background while the Integration Builder continues building auth configs and field mappings.

Key implementation details:

- `this.queue()` which writes tasks to an internal queue and executes them in the background

We expose two MCP tools to the Integration Builder:

- `improve_descriptions(config, provider)`: returns `{ taskId }` immediately
- `get_improve_descriptions_task_status(taskId, provider)`

The Integration Builder generates a config, delegates description improvement, and continues building other components. Meanwhile, the subagent autonomously researches 20+ operations: reading docs, analysing examples, making tool calls to the parent agent’s MCP server for vector search and web research. The Integration Builder can poll for results, retrieve the config if the task is complete and then integrate the improved descriptions.
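The polling side can be sketched as a small helper. This is an illustration only: the `StatusResponse` shape, `pollTask`, and its options are assumptions rather than the actual tool contract, and in practice the polling may be driven by the agent itself calling the status tool instead of by code like this.

```typescript
// Assumed response shape for the status tool; the real payload may differ.
type StatusResponse =
  | { status: "queued" | "running" }
  | { status: "complete"; config: string }
  | { status: "failed"; error: string };

// Calls `getStatus` until the task finishes, fails, or attempts run out.
async function pollTask(
  getStatus: () => Promise<StatusResponse>,
  opts: { intervalMs: number; maxAttempts: number },
): Promise<string> {
  for (let attempt = 1; attempt <= opts.maxAttempts; attempt++) {
    const res = await getStatus();
    if (res.status === "complete") return res.config;
    if (res.status === "failed") {
      throw new Error(`Subagent task failed: ${res.error}`);
    }
    // Still queued or running: wait before asking again.
    await new Promise((resolve) => setTimeout(resolve, opts.intervalMs));
  }
  throw new Error(`Task still pending after ${opts.maxAttempts} attempts`);
}

// Usage with a fake status source that completes on the third call.
async function pollDemo(): Promise<{ config: string; calls: number }> {
  let calls = 0;
  const fakeStatus = async (): Promise<StatusResponse> => {
    calls++;
    return calls < 3
      ? { status: "running" }
      : { status: "complete", config: "improved config" };
  };
  const config = await pollTask(fakeStatus, { intervalMs: 1, maxAttempts: 5 });
  return { config, calls };
}
```

Capping the number of attempts keeps a stuck task from blocking the caller indefinitely; the interval and limit would need tuning to how long the research jobs usually run.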

The future may bring streaming responses or protocol extensions. For now, native RPC with Durable Object persistence gives us reliable, scalable subagent delegation.

Want to see it in action? Book a demo to watch our Claude Code-based Integration Builder Agent.

Building something similar? Reach out to ai@stackone.com - we'd love to chat!